Master's Thesis

Hierarchical Reinforcement Learning for Agile Loco-Manipulation

Master’s Thesis — Hierarchical Deep Reinforcement Learning for Loco-Manipulation

This master's thesis was conducted at Regelungstechnik (Control Engineering), TU Dortmund University, and at the former Department of AI and Autonomous Systems, Fraunhofer IML. The research was carried out as an independent thesis under the supervision of Prof. Dr. Frank Hoffmann and Dr. Julian Eßer.

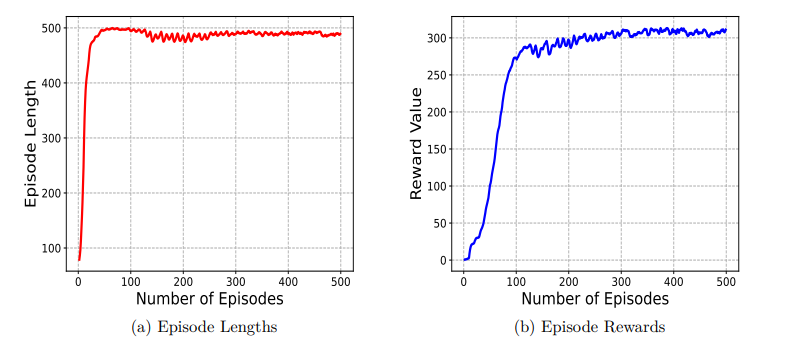

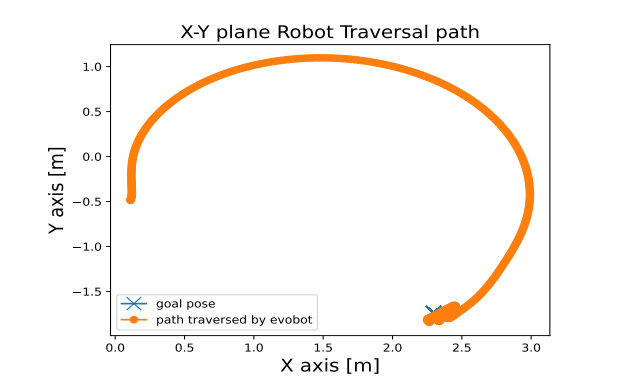

The thesis introduces a novel hierarchical deep reinforcement learning methodology for end-to-end robot learning. A two-level policy architecture, consisting of high-level decision-making and low-level control policies, is used first for autonomous robot navigation and is then extended to loco-manipulation tasks.
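A minimal sketch of how such a two-level loop can be organized is shown below, assuming the high-level policy acts at a lower rate than the low-level policy and passes it a command. In the thesis both levels are learned networks acting on the evoBOT; here they are replaced by hand-written stand-ins (`high_level_policy` and `low_level_policy`, both hypothetical) acting on a toy 1-D robot, and the decision period of 10 steps is an illustrative assumption.

```python
from typing import List, Tuple

# Hypothetical toy setting (not the evoBOT): a 1-D robot with state (position, velocity).
Obs = Tuple[float, float]


def high_level_policy(obs: Obs, goal: float) -> float:
    """Decision layer (stand-in for a learned policy): choose a velocity
    command pointing toward the goal, saturated to [-1, 1]."""
    position, _ = obs
    return max(-1.0, min(1.0, goal - position))


def low_level_policy(obs: Obs, command: float, gain: float = 2.0) -> float:
    """Control layer (stand-in for a learned policy): track the commanded
    velocity with a proportional law and return an acceleration."""
    _, velocity = obs
    return gain * (command - velocity)


def rollout(goal: float, steps: int = 200, dt: float = 0.05, period: int = 10) -> List[Obs]:
    """Two-level loop: the high level acts every `period` steps, the low level every step."""
    obs: Obs = (0.0, 0.0)
    command = 0.0
    trajectory = [obs]
    for t in range(steps):
        if t % period == 0:  # slower decision rate for the high level
            command = high_level_policy(obs, goal)
        action = low_level_policy(obs, command)
        position, velocity = obs
        velocity += action * dt  # semi-implicit Euler on a double integrator
        position += velocity * dt
        obs = (position, velocity)
        trajectory.append(obs)
    return trajectory


if __name__ == "__main__":
    final_position, final_velocity = rollout(goal=1.0)[-1]
    print(f"final position {final_position:.3f}, final velocity {final_velocity:.3f}")
```

Separating the rates lets the high level make coarse decisions over longer horizons while the low level reacts at every control step; in a learned setting, each stand-in would be replaced by a trained network.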

The experimental platform is the evoBOT, a robot with highly complex dynamics that are difficult to model. Training was performed with a GPU-accelerated deep reinforcement learning pipeline in NVIDIA Isaac Sim, built on OmniIsaacGymEnvs (the predecessor of Isaac Lab).

The primary objective of the thesis was to develop a fully autonomous pick-and-place solution in which the evoBOT learns to transport an object between two locations using only onboard IMU data, without any vision or external sensing. This sensing constraint, combined with the robot’s complex dynamics, makes the problem particularly challenging and distinguishes the work from conventional vision-based approaches. The results demonstrate that hierarchical policy learning enables robust coordination of navigation and manipulation behaviors under these constraints.
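To make the sensing constraint concrete, the sketch below shows one way an IMU-only observation could be assembled. It assumes the IMU provides the base orientation as a unit quaternion `(w, x, y, z)` and the body angular velocity. Expressing world gravity in the body frame ("projected gravity") is a convention common in Isaac Gym locomotion examples; whether the thesis uses it, and the two-element high-level command appended at the end, are assumptions. The names `rotate_inverse` and `imu_observation` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate_inverse(q: Sequence[float], v: Sequence[float]) -> Vec3:
    """Express a world-frame vector v in the body frame.

    q = (w, x, y, z) is the unit quaternion giving the body orientation in the
    world frame; rotating by its conjugate uses
    v' = v - 2w (u x v) + 2 u x (u x v), with u = (x, y, z).
    """
    w, u = q[0], (q[1], q[2], q[3])
    uv = cross(u, v)
    uuv = cross(u, uv)
    return (v[0] - 2.0 * w * uv[0] + 2.0 * uuv[0],
            v[1] - 2.0 * w * uv[1] + 2.0 * uuv[1],
            v[2] - 2.0 * w * uv[2] + 2.0 * uuv[2])


def imu_observation(quat: Sequence[float], ang_vel: Sequence[float],
                    command: Sequence[float]) -> List[float]:
    """Hypothetical observation: body angular velocity (3), projected gravity (3),
    and a high-level command (assumed 2-D), concatenated into one flat list."""
    projected_gravity = rotate_inverse(quat, (0.0, 0.0, -1.0))
    return [*ang_vel, *projected_gravity, *command]


if __name__ == "__main__":
    # Base rolled by 90 degrees about its x-axis: gravity now appears along -y.
    rolled = (math.sqrt(0.5), math.sqrt(0.5), 0.0, 0.0)
    print(imu_observation(rolled, (0.0, 0.0, 0.0), (0.3, 0.0)))
```

Projected gravity encodes roll and pitch but is unchanged by rotation about the vertical axis, so it tells the policy how the base is tilted, not which way it is facing.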